Real-time Networking #

Why is networking so important in Real-time communication? #

Networks are the limiting factor in Real-time communication. In an ideal world we would have unlimited bandwidth and packets would arrive instantaneously. This isn't the case though. Networks are limited, and their conditions can change at any time. Measuring and observing network conditions is also a difficult problem. You can get different behavior depending on the hardware, the software, and how both are configured.

Real-time communication also poses a problem that doesn't exist in most other domains. For a web developer it isn't fatal if your website is slower on some networks. As long as all the data arrives, users are happy. With WebRTC, if your data is late, it is useless. No one cares about what was said in a conference call 5 seconds ago. So when developing a real-time communication system, you have to make a trade-off. What is my time limit, and how much data can I send?

This chapter covers the concepts that apply to both data and media communication. In later chapters we go beyond the theory and discuss how WebRTC's media and data subsystems solve these problems.

What are the attributes of the network that make it difficult? #

Code that works effectively across all networks is complicated. You have lots of different factors, and they can all affect each other in subtle ways. These are the most common issues that developers will encounter.

Bandwidth #

Bandwidth is the maximum rate of data that can be transferred across a given path. It is important to remember that this isn't a static number either. The bandwidth will change along the route as more (or fewer) people use it.

Transmission Time and Round Trip Time #

Transmission Time is how long it takes for a packet to arrive at its destination. Like Bandwidth, this isn't constant. Transmission Time can fluctuate at any time.

transmission_time = receive_time - send_time

To compute transmission time, you need clocks on the sender and the receiver that are synchronized with millisecond precision. Even a small deviation would produce an unreliable transmission time measurement. Since WebRTC operates in highly heterogeneous environments, it is next to impossible to rely on perfect time synchronization between hosts.

Round-trip time measurement is a workaround for imperfect clock synchronization. Instead of operating on distributed clocks, a WebRTC peer sends a special packet with its own timestamp sendertime1. A cooperating peer receives the packet and reflects the timestamp back to the sender. Once the original sender gets the reflected time, it subtracts the timestamp sendertime1 from the current time sendertime2. This time delta is called "round-trip propagation delay" or, more commonly, round-trip time.

rtt = sendertime2 - sendertime1

Half of the round trip time is considered a good enough approximation of the transmission time. This workaround is not without drawbacks. It assumes that it takes an equal amount of time to send and to receive a packet. On cellular networks, however, send and receive operations may not be time-symmetrical. You may have noticed that upload speeds on your phone are almost always lower than download speeds.

transmission_time = rtt/2
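The echo exchange above can be sketched in a few lines. This is only an illustration: the function names and the dictionary used as a "packet" are hypothetical, and timestamps are passed in explicitly so the example does not depend on a real clock or network.

```python
def make_probe(now_ms: float) -> dict:
    """Sender: stamp the probe with the sender's own clock (sendertime1)."""
    return {"sendertime1": now_ms}


def reflect(probe: dict) -> dict:
    """Receiver: echo the timestamp back unchanged; its own clock is never read."""
    return {"sendertime1": probe["sendertime1"]}


def round_trip_time(reply: dict, now_ms: float) -> float:
    """Sender: rtt = sendertime2 - sendertime1, both taken from the same clock."""
    return now_ms - reply["sendertime1"]


def estimate_transmission_time(rtt_ms: float) -> float:
    """Assumes a symmetric path, which may not hold on cellular networks."""
    return rtt_ms / 2


reply = reflect(make_probe(now_ms=1000.0))
rtt = round_trip_time(reply, now_ms=1080.0)
print(rtt, estimate_transmission_time(rtt))
```

Because only the sender's clock is ever read, no synchronization between the two hosts is needed.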

The technical details of round-trip time measurement are described in more depth in the RTCP Sender and Receiver Reports chapter.

Jitter #

Jitter is the fact that Transmission Time may vary for every packet. Your packets could be delayed, but then arrive in bursts.
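One way to put a number on this variation is a running estimate of how much the spacing between packets changes between sender and receiver. The sketch below uses the smoothing that RTP defines for interarrival jitter in RFC 3550 (a gain of 1/16); the function name and the millisecond lists are illustrative assumptions, not something this chapter prescribes.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate over pairs of consecutive packets.

    For each pair, d is the change in spacing between sending and receiving.
    Only differences of timestamps on the same clock are used, so the two
    clocks do not need to be synchronized.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


# Packets sent every 20 ms; the third one is delayed and the fourth catches up.
print(interarrival_jitter([0, 20, 40, 60], [5, 25, 61, 65]))
```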

Packet Loss #

Packet Loss is when messages are lost in transmission. The loss can be steady, or it can come in spikes. It may be caused by the type of network, such as satellite or Wi-Fi, or it may be introduced by software along the way.

Maximum Transmission Unit #

Maximum Transmission Unit is the limit on how large a single packet can be. Networks don't allow you to send one giant message. At the protocol level, messages may have to be split into multiple smaller packets.

The MTU will also differ depending on which network path you take. You can use a protocol like Path MTU Discovery to figure out the largest packet size you can send.
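Splitting a message so that no packet exceeds the MTU might look like the sketch below. The function name, the optional header allowance, and the 1200-byte value in the usage line are assumptions chosen for illustration; a real protocol would also add sequence information so the receiver can reassemble the pieces.

```python
def split_into_packets(message: bytes, mtu: int, header_size: int = 0) -> list[bytes]:
    """Cut a message into chunks that fit in one packet each."""
    payload_size = mtu - header_size
    if payload_size <= 0:
        raise ValueError("MTU must be larger than the header size")
    return [message[i:i + payload_size] for i in range(0, len(message), payload_size)]


packets = split_into_packets(b"x" * 3000, mtu=1200)
print([len(p) for p in packets])
```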

Congestion #

Congestion is when the limits of the network have been reached. This is usually because you have hit the peak bandwidth that the current route can handle. It can also be operator-imposed, such as hourly limits that your ISP configures.

Congestion shows itself in many different ways. There is no standardized behavior. In most cases, when congestion is reached the network will drop excess packets. In other cases the network will buffer, which causes the Transmission Time of your packets to increase. You may also see more jitter as your network becomes congested. This is a rapidly changing area, and new algorithms for congestion detection are still being written.

Dynamic #

Networks are incredibly dynamic and conditions can change rapidly. During a call you may send and receive hundreds of thousands of packets. Those packets will travel through multiple hops. Those hops will be shared by millions of other users. Even on your local network, someone could be downloading an HD movie, or a device might decide to download a software update.

Having a good call isn't as simple as measuring your network at startup. You need to evaluate it constantly. You also need to handle all the different behaviors that come from the wide variety of network hardware and software.

Solving Packet Loss #

Handling packet loss is the first problem to solve. There are multiple ways to solve it, each with its own benefits. The right choice depends on what you are sending and how tolerant you are of latency. It is also important to note that not all packet loss is fatal. Losing some video might not be a problem; the human eye might not even be able to perceive it. Losing a user's text message is fatal.

Let's say you send 10 packets, and packets 5 and 6 are lost. Here are the ways you can solve it.

Acknowledgments #

Acknowledgments is when the receiver notifies the sender of every packet it has received. The sender becomes aware of packet loss when it gets an acknowledgment twice for a packet that isn't the final one. When the sender gets an ACK for packet 4 twice, it knows that packet 5 has not been seen yet.
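The duplicate-ACK signal can be sketched as follows. The example assumes cumulative acknowledgments (the receiver always acknowledges the highest packet it has received in order), which is one common way to get the "ACK for packet 4 twice" behavior described above; both function names are hypothetical.

```python
def cumulative_acks(received):
    """Receiver: after each arrival, ACK the highest packet received in order."""
    seen = set()
    highest_in_order = 0
    acks = []
    for seq in received:
        seen.add(seq)
        while highest_in_order + 1 in seen:
            highest_in_order += 1
        acks.append(highest_in_order)
    return acks


def first_missing(acks):
    """Sender: a repeated ACK means the packet right after it has not been seen."""
    previous = None
    for ack in acks:
        if ack == previous:
            return ack + 1
        previous = ack
    return None


acks = cumulative_acks([1, 2, 3, 4, 7, 8, 9, 10])  # packets 5 and 6 were lost
print(acks, first_missing(acks))
```

Note that the sender only learns about packet 5 at this point. It has no way of knowing that packet 6 is missing as well until packet 5 has been repaired.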

Selective Acknowledgments #

Selective Acknowledgments are an improvement upon Acknowledgments. A receiver can send a SACK that acknowledges multiple packets and notifies the sender of gaps.

Now the sender can get a SACK for packets 4 and 7. It then knows it needs to re-send packets 5 and 6.
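On the sender side, turning a SACK into a retransmission list is a matter of finding the holes between acknowledged sequence numbers. A minimal sketch, assuming the SACK is delivered as a plain list of sequence numbers (the function name and representation are hypothetical):

```python
def gaps_from_sack(acked):
    """Return the sequence numbers missing between acknowledged packets."""
    ordered = sorted(set(acked))
    missing = []
    for low, high in zip(ordered, ordered[1:]):
        missing.extend(range(low + 1, high))
    return missing


print(gaps_from_sack([4, 7]))
```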

Negative Acknowledgments #

Negative Acknowledgments solve the problem the opposite way. Instead of notifying the sender of what it has received, the receiver notifies the sender of what has been lost. In our case a NACK will be sent for packets 5 and 6. The sender only learns about the packets the receiver wants re-sent.
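A rough sketch of both sides of a NACK exchange is shown below. The function names and the dictionary of sent payloads are hypothetical, and a real receiver would also wait briefly before reporting a gap in case the packet is merely reordered.

```python
def build_nack(received):
    """Receiver: list the sequence numbers that never arrived between the
    lowest and highest packet seen so far."""
    seen = set(received)
    if not seen:
        return []
    return [seq for seq in range(min(seen), max(seen) + 1) if seq not in seen]


def retransmit(sent_packets, nack):
    """Sender: resend only the packets the receiver asked for."""
    return [sent_packets[seq] for seq in nack if seq in sent_packets]


sent = {seq: f"payload-{seq}" for seq in range(1, 11)}
nack = build_nack([1, 2, 3, 4, 7, 8, 9, 10])
print(nack, retransmit(sent, nack))
```

When nothing is lost, no feedback is sent at all, which is why this approach suits media streams with a steady flow of packets.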

Forward Error Correction #

Forward Error Correction fixes packet loss pre-emptively. The sender sends redundant data, which means a lost packet doesn't affect the final stream. One popular algorithm for this is Reed–Solomon error correction.

This reduces the latency and complexity of sending and handling Acknowledgments. Forward Error Correction is a waste of bandwidth if the network you are on has zero loss.
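To make the idea of redundant data concrete, here is a deliberately simple XOR parity scheme. It is not Reed–Solomon: it can repair only one lost packet per group and assumes all packets in the group have the same length. The function names are hypothetical.

```python
def xor_parity(packets: list[bytes]) -> bytes:
    """Build one parity packet by XOR-ing equal-length packets together."""
    parity = bytearray(len(packets[0]))
    for packet in packets:
        for i, byte in enumerate(packet):
            parity[i] ^= byte
    return bytes(parity)


def recover_missing(received: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing packet from the survivors plus the parity."""
    return xor_parity(received + [parity])


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(group)  # sent alongside the group as a fourth packet
print(recover_missing([group[0], group[2]], parity))
```

The cost is visible here: one extra packet for every three, whether or not anything is lost.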

Solving Jitter #

Jitter is present in most networks. Even inside a LAN you have many devices sending data at fluctuating rates. You can easily observe jitter by pinging another device with the ping command and noticing the fluctuations in round-trip latency.

To solve jitter, clients use a JitterBuffer. The JitterBuffer ensures a steady delivery time of packets. The downside is that the JitterBuffer adds some latency to packets that arrive early. The upside is that late packets don't cause jitter. Imagine that during a call, you see the following packet arrival times:

* time=1.46 ms

* time=1.93 ms

* time=1.57 ms

* time=1.55 ms

* time=1.54 ms

* time=1.72 ms

* time=1.45 ms

* time=1.73 ms

* time=1.80 ms

In this case, around 1.8 ms would be a good choice. Packets that arrive late will use our window of latency. Packets that arrive early will be delayed a bit and can fill the window depleted by late packets. This means we no longer have stuttering and provide a smooth delivery rate for the client.

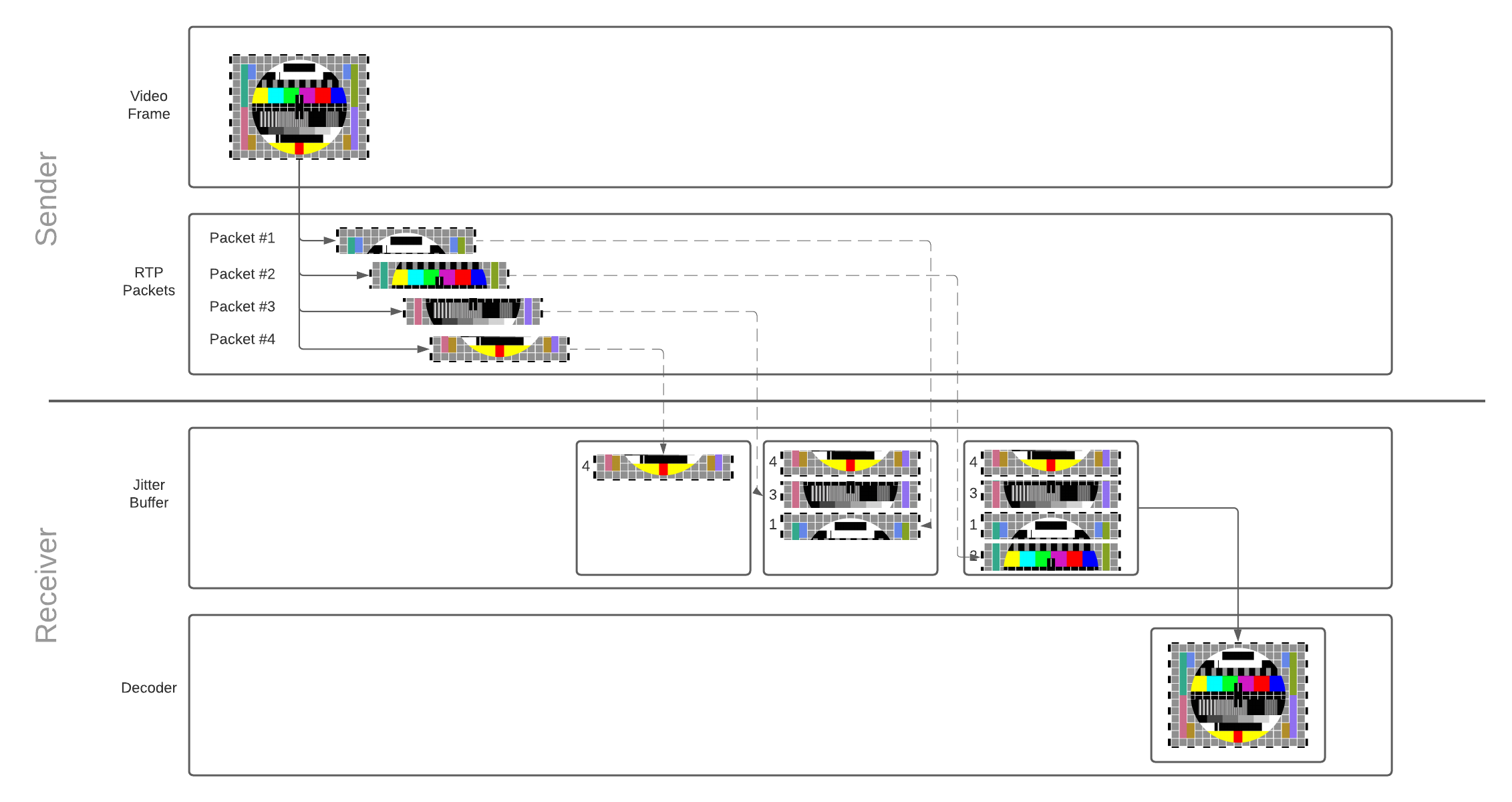
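One hypothetical way to arrive at a value like 1.8 ms is to pick the smallest delay that covers a chosen share of the observed arrival times. The function name and the 85% coverage target below are assumptions made for illustration, not a rule from this chapter.

```python
import math


def choose_buffer_delay(arrival_ms, coverage=0.85):
    """Smallest observed delay that is >= the given share of all samples."""
    ordered = sorted(arrival_ms)
    index = max(0, math.ceil(coverage * len(ordered)) - 1)
    return ordered[index]


samples = [1.46, 1.93, 1.57, 1.55, 1.54, 1.72, 1.45, 1.73, 1.80]
print(choose_buffer_delay(samples))
```

Raising the coverage toward 1.0 would also absorb the 1.93 ms outlier, at the price of delaying every other packet a little longer.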

JitterBuffer operation #

Every packet is added to the jitter buffer as soon as it is received. Once there are enough packets to reconstruct a frame, the packets that make up the frame are released from the buffer and emitted for decoding. The decoder, in turn, decodes and draws the video frame on the user's screen. Since the jitter buffer has limited capacity, packets that stay in the buffer for too long are discarded.
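The flow described above could be sketched roughly like this. Everything here is a simplification under stated assumptions: the class and method names are hypothetical, each packet is assumed to carry its frame id, its index within the frame, and the frame's packet count, and limited capacity is modeled as a maximum age rather than a byte limit.

```python
class JitterBuffer:
    def __init__(self, max_age_ms: float):
        self.max_age_ms = max_age_ms
        # frame_id -> {"arrived": first arrival time, "total": n, "packets": {index: payload}}
        self.frames = {}

    def push(self, frame_id, index, total, payload, now_ms):
        """Store a packet as soon as it is received."""
        frame = self.frames.setdefault(
            frame_id, {"arrived": now_ms, "total": total, "packets": {}}
        )
        frame["packets"][index] = payload

    def pop_ready(self, now_ms):
        """Emit every complete frame (lowest frame id first) and discard
        incomplete frames that have waited longer than max_age_ms."""
        ready = []
        for frame_id in sorted(self.frames):
            frame = self.frames[frame_id]
            if len(frame["packets"]) == frame["total"]:
                ready.append(b"".join(frame["packets"][i] for i in range(frame["total"])))
                del self.frames[frame_id]
            elif now_ms - frame["arrived"] > self.max_age_ms:
                del self.frames[frame_id]
        return ready


buf = JitterBuffer(max_age_ms=100)
buf.push(1, 0, 2, b"he", now_ms=0)
buf.push(1, 1, 2, b"y!", now_ms=20)
print(buf.pop_ready(now_ms=20))
```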

Read more about how video frames are converted to RTP packets, and why reconstruction is necessary, in the media communication chapter.

jitterBufferDelay provides great insight into your network performance and its influence on playback smoothness. It is part of the WebRTC statistics API and applies to the receiver's inbound stream. The delay is the amount of time video frames spend in the jitter buffer before being emitted for decoding. A long jitter buffer delay means your network is highly congested.
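In the statistics API, jitterBufferDelay is reported as a running total in seconds, alongside jitterBufferEmittedCount, so the average delay over an interval comes from the difference between two snapshots. The sketch below assumes the two snapshots have already been collected into plain dictionaries; the function name and the sample numbers are hypothetical.

```python
def average_jitter_buffer_delay_ms(previous: dict, current: dict) -> float:
    """Average time spent in the jitter buffer between two stats snapshots."""
    emitted = current["jitterBufferEmittedCount"] - previous["jitterBufferEmittedCount"]
    if emitted <= 0:
        return 0.0  # nothing was emitted in this interval
    delay_s = current["jitterBufferDelay"] - previous["jitterBufferDelay"]
    return 1000.0 * delay_s / emitted


earlier = {"jitterBufferDelay": 10.0, "jitterBufferEmittedCount": 200}
later = {"jitterBufferDelay": 13.0, "jitterBufferEmittedCount": 250}
print(average_jitter_buffer_delay_ms(earlier, later))
```

Tracking this value over time shows whether the buffer is growing, which is more informative than a single reading.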

Detecting Congestion #

Before we can resolve congestion, we need to detect it. To detect it we use a congestion controller. This is a complicated subject, and it is still changing rapidly. New algorithms are still being published and tested. At a high level they all operate the same way. A congestion controller provides bandwidth estimates given some inputs. These are some possible inputs:

- Packet Loss - Packets are dropped as the network becomes congested.

- Jitter - As network equipment becomes more overloaded, packet queuing causes arrival times to become erratic.

- Round Trip Time - Packets take longer to arrive when the network is congested. Unlike jitter, the Round Trip Time just keeps increasing.

- Explicit Congestion Notification - Newer networks may tag packets as at risk of being dropped in order to relieve congestion.

These values need to be measured continuously during the call. Utilization of the network may increase or decrease, so the available bandwidth could be changing constantly.
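As a toy illustration of "inputs in, bandwidth estimate out", the sketch below adjusts an estimate from packet loss and round trip time only. It is not any published algorithm: the function name, the thresholds (10% and 2% loss, a doubling of RTT), and the step sizes are all hypothetical values chosen to keep the example readable.

```python
def update_estimate(estimate_bps, loss_fraction, rtt_ms, baseline_rtt_ms):
    """Return a new bandwidth estimate from the latest measurements."""
    if loss_fraction > 0.10 or rtt_ms > 2 * baseline_rtt_ms:
        return estimate_bps * 0.85  # signs of congestion: back off
    if loss_fraction < 0.02:
        return estimate_bps * 1.05  # network looks healthy: probe upward
    return estimate_bps  # in between: hold steady


estimate = 1_000_000
for loss, rtt in [(0.0, 50), (0.0, 55), (0.15, 60), (0.05, 140)]:
    estimate = update_estimate(estimate, loss, rtt, baseline_rtt_ms=50)
print(round(estimate))
```

Calling this once per feedback interval reflects the point above that the measurements have to continue for the whole call.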

Resolving Congestion #

Now that we have an estimated bandwidth, we need to adjust what we are sending. How we adjust depends on what kind of data we want to send.

Sending Slower #

Limiting the speed at which you send data is the first solution to preventing congestion. The Congestion Controller gives you an estimate, and it is the sender's responsibility to rate limit.

This is the method used for most data communication. With protocols like TCP this is all done by the operating system and is completely transparent to both users and developers.
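A token bucket is one common way for a sender to enforce such a limit. The sketch below is an assumption-laden illustration: the Pacer class, its parameters, and the explicit now_ms argument (used instead of a real clock so the behavior is easy to follow) are all hypothetical.

```python
class Pacer:
    def __init__(self, rate_bps: float, burst_bytes: int):
        self.rate_bps = rate_bps          # would be updated from the congestion controller
        self.burst_bytes = burst_bytes    # largest burst allowed at once
        self.tokens = float(burst_bytes)  # bytes we may send right now
        self.last_ms = 0.0

    def try_send(self, packet_size: int, now_ms: float) -> bool:
        """Return True if the packet may be sent now, False if it must wait."""
        elapsed_s = (now_ms - self.last_ms) / 1000.0
        self.last_ms = now_ms
        # Refill at rate_bps / 8 bytes per second, capped at the burst size.
        self.tokens = min(self.burst_bytes, self.tokens + elapsed_s * self.rate_bps / 8)
        if packet_size > self.tokens:
            return False
        self.tokens -= packet_size
        return True


pacer = Pacer(rate_bps=8000, burst_bytes=1000)  # 1000 bytes per second
print(pacer.try_send(1000, now_ms=0), pacer.try_send(500, now_ms=100))
```

Packets that are refused would be queued and retried later, which is exactly the added delay that real-time media cannot always afford.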

Sending Less #

In some cases we can send less information to stay within our limits. We also have hard deadlines for the arrival of our data, so we can't simply send slower. These are the constraints that Real-time media falls under.

If we don't have enough bandwidth available, we can lower the quality of the video we send. This requires a tight feedback loop between your video encoder and your congestion controller.
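The feedback loop can be pictured as the congestion controller's estimate selecting an encoder setting. In the sketch below the quality ladder, its bitrates, the 10% headroom, and the function name are all hypothetical; a real encoder would typically adjust its bitrate continuously rather than in three steps.

```python
# (label, bitrate in bits per second), ordered from best to worst quality.
LADDER = [
    ("720p", 1_500_000),
    ("360p", 600_000),
    ("180p", 200_000),
]


def pick_encoding(estimate_bps, headroom=0.9):
    """Choose the best rung that fits within the estimate minus some headroom."""
    budget = estimate_bps * headroom
    for label, bitrate in LADDER:
        if bitrate <= budget:
            return label, bitrate
    return LADDER[-1]  # nothing fits: fall back to the lowest rung


for estimate in (2_000_000, 1_000_000, 100_000):
    print(estimate, pick_encoding(estimate))
```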